Epoch AI has gathered feedback from Fields Medal winners Terence Tao and Timothy Gowers on its challenging FrontierMath problems. Tao suggested that solving these problems currently takes a blend of expertise, such as a graduate student in a related field working with advanced AI tools. Unlike traditional math competitions, which often avoid specialized knowledge, FrontierMath embraces it, requiring complex calculations while keeping answers easy to verify. Mathematician Evan Chen compared FrontierMath with other competitions, noting its distinctive emphasis on computation. Epoch AI plans to test AI models against this benchmark regularly and to release more sample problems to support the research community.

Recently, Epoch AI asked renowned mathematicians Terence Tao and Timothy Gowers to review a set of difficult math problems from its FrontierMath benchmark. Tao described the problems as extremely challenging and said that the best way to tackle them right now might be to pair a graduate student who has relevant expertise with advanced AI tools.

FrontierMath problems are designed so that answers can be checked automatically: each answer is either an exact number or a more complex mathematical object. The problems are also crafted to be “guessproof,” meaning their answers are large numbers or complex objects, so a correct random guess is nearly impossible.
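To make the idea of automatic checking concrete, here is a minimal sketch in Python. It is not Epoch AI's actual grader: the function names `verify_answer` and `guess_probability`, the supported answer types, and the sample values are all assumptions chosen for illustration. It shows exact comparison of an integer or structured answer, and why a large answer space makes a blind guess unlikely.

```python
from fractions import Fraction


def verify_answer(submitted, expected):
    """Hypothetical checker: True only if the answers match exactly."""
    # Exact integers: require a real int (not a float or bool) of equal value.
    if isinstance(expected, int):
        return type(submitted) is int and submitted == expected
    # Exact rationals: Fraction normalizes, so 2/6 and 1/3 compare equal.
    if isinstance(expected, Fraction):
        return isinstance(submitted, Fraction) and submitted == expected
    # Structured answers: compare tuples component by component.
    if isinstance(expected, tuple):
        return (
            isinstance(submitted, tuple)
            and len(submitted) == len(expected)
            and all(verify_answer(s, e) for s, e in zip(submitted, expected))
        )
    raise TypeError(f"unsupported answer type: {type(expected).__name__}")


def guess_probability(num_candidates):
    """Chance that one uniform blind guess hits the single correct answer."""
    return Fraction(1, num_candidates)


if __name__ == "__main__":
    expected = 2**61 - 1  # illustrative large integer answer, not a real problem
    print(verify_answer(2305843009213693951, expected))
    print(verify_answer(float(expected), expected))  # floats are rejected
    print(guess_probability(10**12))
```

Requiring exact types and values keeps checking mechanical, and a single answer hidden among many candidates is what makes a lucky guess so improbable in this simplified model.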

Mathematician Evan Chen wrote on his blog about how FrontierMath's structure differs from that of traditional math competitions. Contests such as the International Mathematical Olympiad focus on creative thinking and avoid requiring specialized knowledge. FrontierMath, by contrast, embraces complexity and expects a solid understanding of advanced mathematical concepts. Chen noted that while traditional competition problems emphasize creativity, FrontierMath also rewards computational power and the ability to implement algorithms.

Epoch AI plans to evaluate AI models against this benchmark on an ongoing basis and will release more sample problems soon so that the research community can keep testing its systems.

In summary, the collaboration between human expertise and modern AI technologies in tackling FrontierMath showcases an exciting intersection of mathematics and artificial intelligence.



What is the new math benchmark all about?

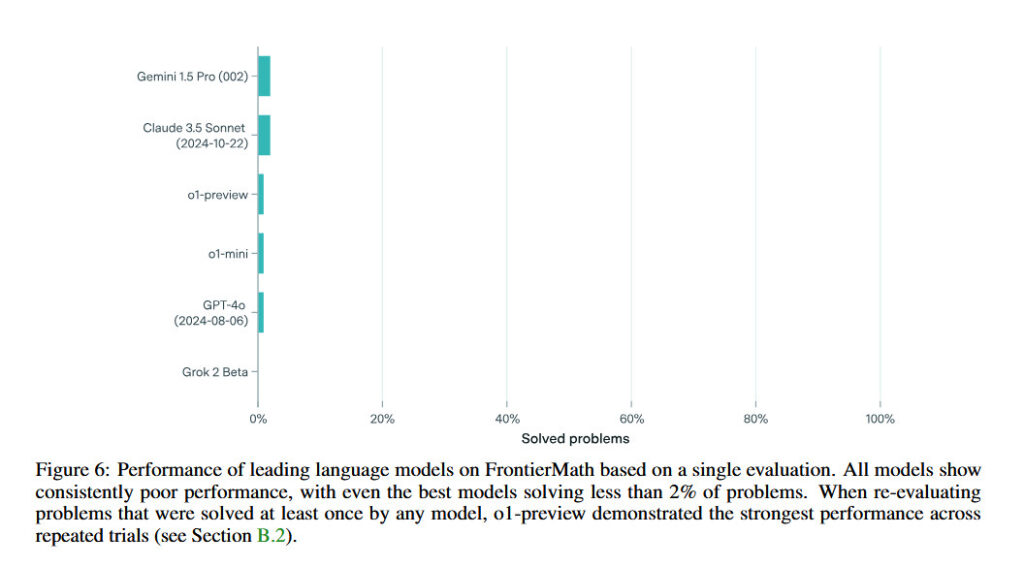

The new math benchmark consists of complex math problems that are challenging for both AI systems and human experts, pushing the limits of their understanding.

Why is this benchmark important?

It helps researchers see how well AI can perform in math compared to skilled humans, showing both the strengths and the weaknesses of current technologies.

Who can take this benchmark?

Anyone with a strong interest in math, whether students, researchers, AI developers, or math enthusiasts, can attempt it.

How does this benchmark differ from other tests?

This benchmark focuses on unique and tricky problems that require deep thinking and creativity, rather than just solving standard equations.

What can we learn from the results of this benchmark?

The results will help us understand how AI learns and solves problems, and may guide improvements in AI development and math education.